Overview of how automated tests are structured for the Status app

As part of CI for the Status mobile app, and to ensure that no regressions appear after code changes (bug fixes, new or updated features), we use automated end-to-end (e2e) tests.

- Automated tests are written in Python 3.6 with pytest.

- Appium (server) and Selenium WebDriver (protocol) form the base of the test automation framework.

- TestRail is the test case management tool where we keep our test cases. To simplify their management we keep them more or less atomic (so a particular scenario covers one feature at a time). Each test case gets a priority (Critical/High/Medium/Low).

- SauceLabs is a cloud-based mobile application testing platform. We use Android emulators (Android 8.0) there for test script execution. We can have at most 24 sessions running at the same time.
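As a rough illustration of how one emulator session might be described to Appium, here is a minimal sketch of a capabilities dictionary. `platformName`, `platformVersion`, `deviceName` and `app` are standard Appium capability names; the function name, the device name value and the `name` label are assumptions made for this example and are not taken from our actual configuration.

```python
MAX_SESSIONS = 24  # maximum number of concurrent sessions mentioned above


def build_capabilities(apk_url, test_name, platform_version="8.0"):
    """Return an illustrative capabilities dict for one Android emulator session."""
    return {
        "platformName": "Android",
        "platformVersion": platform_version,
        # Assumed emulator name; the real value depends on the SauceLabs setup.
        "deviceName": "Android GoogleAPI Emulator",
        # Location of the .apk under test.
        "app": apk_url,
        # Assumed label used to identify the session in the dashboard.
        "name": test_name,
    }


caps = build_capabilities("https://example.org/build.apk", "test_send_stt_from_wallet")
print(caps["platformName"], caps["platformVersion"])
```

A dictionary like this would then be handed to the Appium client when a session is opened; how exactly depends on the client version in use.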

For now we support e2e tests for Android only.

Whenever we need to run a set of test scripts, we create 24 parallel sessions. Each thread uploads the Android .apk file to SauceLabs → runs through the test steps → receives the result (whether the test failed on a particular step or succeeded with no errors). The test results are then parsed and pushed as a GitHub comment (if the suite ran against a PR) and into TestRail.
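The flow above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_one` stands in for "upload the apk, run the steps, return the result" and is a hypothetical callable, and the comment layout is invented for the example rather than copied from the real GitHub comment.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_SESSIONS = 24  # parallel session limit mentioned above


def run_suite(tests, run_one, max_sessions=MAX_SESSIONS):
    """Run every test through run_one(name) -> (passed, detail) in parallel."""
    with ThreadPoolExecutor(max_workers=max_sessions) as pool:
        # pool.map keeps the input order, so results line up with test names.
        outcomes = list(pool.map(run_one, tests))
    return dict(zip(tests, outcomes))


def format_comment(results):
    """Turn {name: (passed, detail)} into a plain-text summary (assumed layout)."""
    failed = {name: detail for name, (ok, detail) in results.items() if not ok}
    passed = [name for name, (ok, _) in results.items() if ok]
    lines = [f"Total: {len(results)}, failed: {len(failed)}, passed: {len(passed)}"]
    for name, detail in sorted(failed.items()):
        lines.append(f"- FAILED {name}: {detail}")
    for name in sorted(passed):
        lines.append(f"- PASSED {name}")
    return "\n".join(lines)


def fake_run(name):
    # Stand-in for a real session: pretend that test_b cannot find an element.
    if name == "test_b":
        return (False, "Could not find element XYZ")
    return (True, "")


print(format_comment(run_suite(["test_a", "test_b", "test_c"], fake_run)))
```

Failed tests are listed first in this sketch because they are usually what a reviewer looks for in the PR comment.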

We run the whole test suite against each nightly build (if the nightly build job succeeded). The results of the test run are saved in TestRail.

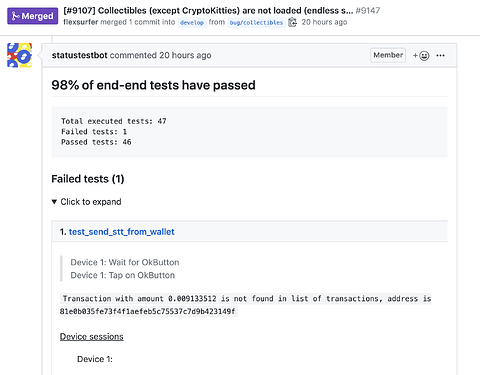

We also run a set of autotests whenever a PR with successful builds is moved into the E2E Tests column of the Pipeline for QA dashboard (Pipeline for QA · GitHub). In that case we also save the results in TestRail and post a comment with the test results in the respective PR.

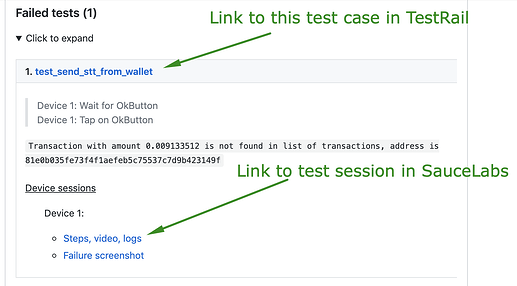

- The test_send_stt_from_wallet link opens the test in TestRail (Login - TestRail), where the performed steps can be found

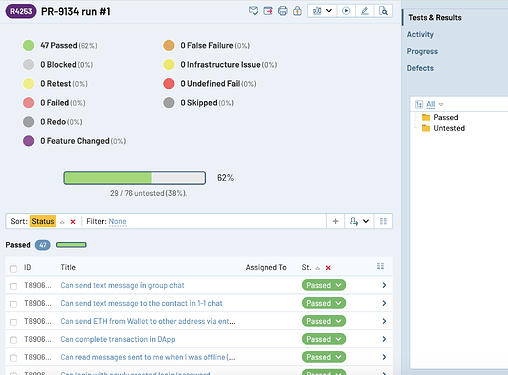

- The list of all runs performed by the test jobs can be found in TestRail (Login - TestRail)

(login credentials for TestRail are available in LastPass, or ping @Sergey or @Chu for details)

Opening any test run takes you to the list of test cases with their results.

There are two main Jenkins jobs responsible for executing the e2e test suite: https://ci.status.im/job/end-to-end-tests/job/status-app-end-to-end-tests/ and https://ci.status.im/job/end-to-end-tests/job/status-app-nightly/, where you can find the list of runs performed for PRs and nightly develop builds respectively. These jobs can also be started manually: click ‘Build with Parameters’, specify the parameters (an explanation of each parameter is given below its edit box) and hit run. The job will then be put in the queue.

One more Jenkins job lets you re-run specific tests for a PR (usually done for failed tests): https://ci.status.im/job/end-to-end-tests/job/re-run-e2e-tests/build?delay=0sec

You need to provide:

- apk: --apk=[url_to_apk_build_here]

- pr_id: pull request number (e.g. 1234)

- branch: name of the branch from which the tests are taken

- testrail_case_id: the IDs of the test cases to run, as found in TestRail (4-digit values)

Once the job starts, it picks up the specified tests, runs them against the provided apk and sends the results to the pull request.
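To make the expected shape of these parameters concrete, here is a small validation sketch. It is not part of the Jenkins job: the function name is hypothetical, and joining several case IDs with a comma is an assumption, since the separator the job expects is not stated here.

```python
import re


def build_rerun_params(apk_url, pr_id, branch, case_ids):
    """Validate and assemble the re-run job parameters described above."""
    if not str(pr_id).isdigit():
        raise ValueError(f"pr_id must be a pull request number, got {pr_id!r}")
    if not branch:
        raise ValueError("branch must not be empty")
    ids = [str(case_id) for case_id in case_ids]
    # TestRail case IDs are described above as 4-digit values.
    bad = [case_id for case_id in ids if not re.fullmatch(r"\d{4}", case_id)]
    if not ids or bad:
        raise ValueError(f"expected 4-digit TestRail case IDs, got {ids}")
    return {
        "apk": f"--apk={apk_url}",
        "pr_id": str(pr_id),
        "branch": branch,
        # Assumed separator for multiple IDs.
        "testrail_case_id": ",".join(ids),
    }


print(build_rerun_params("https://example.org/build.apk", 1234, "develop", [5314, 5315]))
```

Checking the values before starting the job avoids waiting in the queue for a run that would fail on a malformed parameter.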

Even with 24 parallel sessions, testing is a time-consuming operation (the whole test suite we have automated at the moment takes ~40 minutes to finish). So for PRs we run only the set of Critical tests (otherwise some PRs could wait for their turn in the scheduled Jenkins job until the next day).
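The selection rule can be expressed as a short filter. This is a sketch only: the trigger names `"pr"` and `"nightly"` and the `(case_id, priority)` tuples are assumptions for the example, not the data model used by the real jobs.

```python
PRIORITIES = ("Critical", "High", "Medium", "Low")


def select_cases(cases, trigger):
    """Pick case IDs to run: everything for nightly builds, Critical only for PRs.

    cases is a list of (case_id, priority) tuples.
    """
    for _, priority in cases:
        if priority not in PRIORITIES:
            raise ValueError(f"unknown priority: {priority!r}")
    if trigger == "nightly":
        return [case_id for case_id, _ in cases]
    if trigger == "pr":
        return [case_id for case_id, priority in cases if priority == "Critical"]
    raise ValueError(f"unknown trigger: {trigger!r}")


cases = [(1001, "Critical"), (1002, "High"), (1003, "Critical"), (1004, "Low")]
print(select_cases(cases, "pr"))
```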

Analysing test results (and why tests fail)

After an automated test run has finished, the test results can be found in the GH comment (if the test suite ran against a PR) and in TestRail. A test has one of two states: Passed or Failed. A test fails when a certain condition of a test step has not been met, or when the automated test cannot proceed because it cannot find an element it expects to be on the screen.

Several examples of why a test fails:

- The test clicked on an element which should load a new screen (or pop-up) and waits for some element on that screen. But the test did not wait long enough for the new screen to appear, so it fails with “Could not find element XYZ” (in our opinion this is more an app issue than a test issue, but we cannot spend our time and dev time on overly specific, random one-off cases where the app lags at different moments)

- The test sent a transaction to an address but it was not mined in time (we currently wait up to ~6 minutes for the balance to change on the recipient side). We classify this as a False Fail, because it is a network issue rather than an app issue.

- Test infrastructure issues: anything related to infrastructure, including issues on the SauceLabs side (the apk failed to install, which is rare; an LTE connection was set by default instead of WiFi; or an unexpected pop-up appeared and prevented the test from going further)

- Failure due to a changed feature which was not taken into account in some test after a code merge (for instance, some element on the screen has been removed, so the XPath we use to locate another element on that screen is now different)

- Valid issue in the automated test scripts

For example, here are the test results for [#9107] Collectibles (except CryptoKitties) are not loaded (endless s… by flexsurfer · Pull Request #9147 · status-im/status-mobile · GitHub, where one test failed. Open the test in TestRail and open the session recorded for this test in SauceLabs.

- In TestRail you may find all the steps performed by the test.

- On the SauceLabs test run page you may find useful: the video of the session, the step logs and the logcat.log of the session

You may see that the last steps of the test were sending a transaction. When we send ETH/tokens we query Etherscan with the recipient's address to confirm that the transaction was received. If that does not happen (within ~6 minutes) the test fails. That is apparently what happened here.
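The waiting logic behind this kind of check can be sketched as a simple polling loop. This is an illustration, not the code from our test scripts: `get_balance` is a hypothetical callable that would query the recipient's balance, the 10-second poll interval is an assumption, and only the ~6 minute limit comes from the description above.

```python
import time


def wait_for_balance_change(get_balance, initial_balance, timeout=360,
                            poll_interval=10, clock=time.monotonic,
                            sleep=time.sleep):
    """Poll get_balance() until it differs from initial_balance or time runs out."""
    deadline = clock() + timeout
    while True:
        balance = get_balance()
        if balance != initial_balance:
            return balance
        if clock() >= deadline:
            # This is the situation reported as a failed test in the example above.
            raise TimeoutError(
                f"balance did not change within {timeout} seconds")
        sleep(poll_interval)


# Simulated recipient balance: unchanged twice, then the transaction arrives.
balances = iter([0, 0, 5])
print(wait_for_balance_change(lambda: next(balances), 0,
                              poll_interval=0, sleep=lambda seconds: None))
```

The `clock` and `sleep` parameters exist only so the loop can be exercised without really waiting six minutes.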

Limits for e2e tests coverage

Not all features of the app can be covered by e2e tests at the moment:

- Colours or position of an element in the UI.

- Real ETH/token transactions. That’s the main reason we have a separate .apk build for automation needs: it defaults to the Ropsten network.

- Autologin/biometric-related actions (autologin is available when the device meets certain conditions, such as having an unlock password set and not being rooted; all emulators in SauceLabs are rooted)

- Camera-related items (no way to scan QR codes with emulators)

- Keycard interactions

Brief flow for test to be automated

Whenever a new test is needed, we first create a test scenario in TestRail. If a certain item can be checked within the scope of an existing test case, we update the existing one (otherwise we may end up with thousands of test cases, which is overkill to manage both in TestRail and in the automated test scripts). On the other hand, complex autotests increase the probability of missing regressions: when test execution stops (due to a valid bug or a changed feature), the remaining test steps stay uncovered. So we need to balance when it makes sense to update an existing test case with more checks.

Then we create a test script based on the test case, ensure the test passes for the build, and push the changes to the repo.

What are the plans for automated testing?

- Currently the goal is to increase test script coverage for the TestRail test cases that we can automate at the moment but that are not yet covered by e2e tests. Of 260 tests, 135 are already automated and ~60 more could be automated; the remaining test cases fall under ‘Limits for e2e tests coverage’

- Assign an accessibility-id to as many elements as possible. We already make this a rule for PRs, though many elements are still located with XPath (JFYI: XPath is a “fragile” way to locate an element, so the more elements in the app have an accessibility-id, the more stable the e2e tests are)
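To illustrate the preference for accessibility-id over XPath, here is a minimal sketch of a helper that chooses a locator. The strategy strings `"accessibility id"` and `"xpath"` are the ones Appium and Selenium use; the helper itself and the example element names are hypothetical.

```python
def pick_locator(accessibility_id=None, xpath=None):
    """Return a (strategy, value) pair, preferring the accessibility id."""
    if accessibility_id:
        # Stable: tied to an identifier assigned in the app code.
        return ("accessibility id", accessibility_id)
    if xpath:
        # Fragile fallback: breaks when the screen layout changes.
        return ("xpath", xpath)
    raise ValueError("either accessibility_id or xpath is required")


print(pick_locator(accessibility_id="send-transaction-button"))
print(pick_locator(xpath="//*[@text='Send transaction']"))
```

A pair like this matches the `(by, value)` arguments of the WebDriver `find_element` call, so a test could unpack it directly when locating the element.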

What if we require a dedicated e2e test as one of the acceptance criteria before closing an issue as 'Fixed'?

We think it makes sense if the issue is more critical for the app than the scenarios currently in our queue to be covered by automation; in that case it should be prioritised.

But what can certainly be done in this respect, regardless of the severity of the issue, is creating a test case in the test management system and mentioning it in the GHI before it is closed (this has recently become one of the acceptance criteria in PRs).

Your questions are welcome!