What if we had the first decentralized self-detonating messenger?

For example, you could set a time (in seconds, minutes, or hours) after which the message is deleted and can’t be retrieved or traced back.

That’s cool and exciting, like a ticking time bomb.

I requested this function several times, but there were more important stages of development. But if it were possible, it would be super!

This function is actually quite hard to implement, if not impossible, other than in the UI, due to the way Status works.

So TL;DR: Status is a permissionless network, meaning anyone can store messages, so there is no way to guarantee that all servers delete the messages I want deleted at a specific time. A user could create a malicious Status node that caches every message that had an expiration time and never deletes them, still giving them complete access.

This is impossible to implement “safely” in any messaging application - Status is no different from Telegram or Snapchat in this regard since each of those could choose to not delete their messages (who knows if they actually do) - nonetheless, it’s a useful thing because when you’re not using a “hacked” client, the messages are gone when someone steals or takes your phone.

In Status in particular, we can’t remove the encrypted messages from the network, but we could clear the relevant encryption keys that are stored on your device, which is equivalent to deleting the messages.

The thing you can do is the OTR approach of having unprovable messages, so you set up the encryption scheme so that any message you make can be forged by anyone else after 1 day. Though this is only meaningful in closed groups, as otherwise someone can just make a “trusted sentry” and add it to all chats, which would endorse which messages it saw were correctly authenticated in real time.

In Status in particular, we can’t remove the encrypted messages from the network, but we could clear the relevant encryption keys that are stored on your device, which is equivalent to deleting the messages.

This is already done: encryption keys are discarded as soon as they are used (on both sides), or, in case they are not used, they are deleted after a while (depending on the number of keys/ratchet sessions you have).
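To illustrate why discarding a key amounts to deleting the message, here is a minimal sketch. Everything in it is an assumption made for illustration: the `key_store` dictionary, the function names, and the toy SHA-256 keystream cipher are hypothetical and are not how Status encrypts messages.

```python
import hashlib
import secrets

# Toy stream cipher for illustration only (hypothetical, not for real use):
# XOR the data with a SHA-256 based keystream. Applying it twice decrypts.
def keystream_xor(key: bytes, data: bytes) -> bytes:
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))

# Hypothetical per-message key store kept on the device.
key_store = {}

def encrypt_message(msg_id: str, plaintext: bytes) -> bytes:
    key = secrets.token_bytes(32)
    key_store[msg_id] = key
    return keystream_xor(key, plaintext)

def read_message(msg_id: str, ciphertext: bytes):
    key = key_store.get(msg_id)
    if key is None:
        return None  # key was discarded: the ciphertext is now unreadable
    return keystream_xor(key, ciphertext)

def expire(msg_id: str) -> None:
    # "Deleting" the message means forgetting its key; the ciphertext
    # may stay on the network forever.
    key_store.pop(msg_id, None)

ciphertext = encrypt_message("m1", b"this message will self-destruct")
before = read_message("m1", ciphertext)
expire("m1")
after = read_message("m1", ciphertext)
```

Note that this only covers keys on devices that follow the rules; a client that keeps the plaintext or the key is not affected.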

The thing you can do is the OTR approach of having unprovable messages, so you set up the encryption scheme so that any message you make can be forged by anyone else after 1 day.

Correct me if I am wrong, I believe you are referring to plausible deniability here.

OTR (in addition to the PD granted by deriving the same symmetric key) publishes MAC keys in the clear after the recipient has acknowledged it.

Underneath, we use Signal’s double ratchet, which does not require publishing MAC keys afterward, as they deemed PD in their protocol to be a bit stronger than in OTR (Signal >> Blog >> Simplifying OTR deniability), so it does not require this extra step.

We currently don’t have PD enabled in our protocol (historically we used the whisper chat key to sign messages so it would invalidate that), but it’s all set up to support it.

In theory we could always use an ephemeral Ethereum key to send messages, each with a different key, and extract information from the ratchet session you have with the participants. That would require a protocol change (not a big change, as it’s just a matter of pulling the identity from the database, which we store already), and would not be breaking as it’s negotiable.

I would think that enabling PD + UI of self detonating messages is enough for most users.

Essentially that would grant us:

- Only the receiver of the message can tell that the message is coming from the original author, but won’t be able to prove that to a third party (cryptographically, that is; of course the message might contain information that only the sender has access to).

Right, you can do this. But that would require knowing the complete set of receivers ahead of time, so you can implement one of these schemes that prevents proving to third parties. So this would presumably be for private chats only at this point (which seems fine).

nice

Yes, PD is nice for some things, but sometimes you just don’t want people to find out what’s in the message at all, regardless of the provenance.

Exploding messages are just a pragmatic solution to that. I guess there’s a social contract here, as well as one of privacy hygiene: in the real world, things you say and do “disappear” most of the time and you have to make an effort to “store” them (or a friendly agency will do so on your behalf). In a chat app, weirdly, people have gotten used to it being the other way around: you have to explicitly remove things or they will be stored.

I created a design for this along with an issue to keep track of it.

That is snazzy as hell! Can’t wait to try.

The “auto-delete” is a nice feature; however, it’s impossible to enforce it at the protocol level (how do you prove you deleted something? maybe with a trusted execution environment? tricky…), so users would have to trust that the other user is also running the official version of Status, which would delete it.

For this to work regardless of whether the receiver is trusted, we should implement “Plausible Deniability of Sender”: we can engineer the messaging system to only handshake through public chatids, but send messages from “unique identities”.

This was already suggested in this post here, which was what brought me here.

Private message sender deniability: the handshake is initialized on public chatids, but it’s based on an agreement of consistency.

1. Alice-tempchatid encrypted to bob-publicchatid: Hey Bob, here is alice-publicchatid, send from your tempchatid to me in my publicchatid, the secret code is 2014.

2. Bob-tempchatid encrypted to alice-publicchatid: Hey Alice, here is #bob, you've been expecting my message? The secret code is 2014, if you are really Alice-tempchatid, answer me here from there the secret code 2020.

3. Alice-tempchatid encrypted to Bob-tempchatid: Hey, it's really me! The secret code is 2020! My message to you is: Lorem ipsum dolor sit amet, consectetur adipiscing.

The secret code is not needed at all, because the tempchatids are a secret code on their own, as only the recipient knows where to answer. It is only in this script to help visualize the agreement of consistency between the peers.

This preserves sender deniability, as Bob could always say that he never answered that message. Alice, however, knows that she only gave the “secret code” to Bob, so if someone answered, it is probably Bob. The rest of the world does not know that she really shared the code only with Bob, so the agreement of consistency is between Alice and Bob: each knows they are talking with the other, but nobody else can confirm that based on the message signatures alone.

Tempchatids could also change with every message, simply by rolling over secrets and new chat ids:

1. Alice-tempchatid1 encrypted to bob-publicchatid: Hey Bob, here is alice-publicchatid, send from your tempchatid to me in my publicchatid, the secret code is 2014.

2. Bob-tempchatid1 encrypted to alice-publicchatid: Hey Alice, here is #bob, you've been expecting my message? The secret code is 2014, if you are really Alice-tempchatid, answer me here from there the secret code 2020.

3. Alice-tempchatid2 encrypted to Bob-tempchatid1: Hey, it's really me! Your secret code is 2020! My message to you is: "Lorem ipsum dolor sit amet, consectetur adipiscing.". My new secret code is 2016.

4. Bob-tempchatid2 encrypted to Alice-tempchatid2: It's Bob again, your secret code is 2016. I just wanted to reply to you: "That's great news! Thanks". My new secret code is 2018.

This might be desirable, as it makes the network difficult to understand even if, hypothetically, many keys were compromised; however, it is not really needed if normal forward secrecy is in place, as the initial unlinking is the main concern.

Keeping messages from being auto-deleted would require a modified client, and a modified client would not be trustworthy at all, so any “attributions of identity” would be worthless to the outside world.

It is very important that all messages in Status have this protection, because if one user leaks messages, they won’t be able to prove their source. E.g., a user is coerced into giving up access to the Status database on their phone; in this scenario the database would be worth nothing for blackmail over the secrets within, and could even have been faked by the coerced user.

This would work best in combination with auto-deletion of messages, though, because in that case all messages just have random senders and receivers, and access to user keys doesn’t help at all, as everything was based on a mutual agreement of consistency.
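The rolling scheme in the numbered steps above could be sketched roughly as follows. This is only an illustration under stated assumptions: `Envelope`, `Peer`, and their fields are hypothetical names, encryption is left out entirely, and the first message is simply accepted (in the scheme above it would arrive encrypted to the public chatid).

```python
import hmac
import secrets
from dataclasses import dataclass
from typing import Optional

@dataclass
class Envelope:
    sender_id: str                # fresh temp chat ID, used for one message only
    answers_code: Optional[str]   # the code the recipient issued last, if any
    next_code: str                # the code the sender expects in the reply
    body: str

class Peer:
    """Hypothetical peer that tracks only the latest codes."""

    def __init__(self) -> None:
        self.issued_code: Optional[str] = None  # code we expect to be answered
        self.peer_code: Optional[str] = None    # code the other side gave us

    def send(self, body: str) -> Envelope:
        self.issued_code = secrets.token_hex(8)
        return Envelope(
            sender_id=secrets.token_hex(16),  # new unlinkable identity each time
            answers_code=self.peer_code,
            next_code=self.issued_code,
            body=body,
        )

    def receive(self, env: Envelope) -> bool:
        if self.issued_code is not None:
            # We are mid-conversation: the message must answer our last code.
            if env.answers_code is None or not hmac.compare_digest(
                env.answers_code, self.issued_code
            ):
                return False
        self.peer_code = env.next_code
        return True

alice, bob, mallory = Peer(), Peer(), Peer()
m1 = alice.send("Hey Bob, it's Alice")
ok1 = bob.receive(m1)
m2 = bob.send("Hey Alice, you've been expecting my message?")
ok2 = alice.receive(m2)
m3 = alice.send("It's really me!")
ok3 = bob.receive(m3)
spoof_accepted = alice.receive(mallory.send("I'm Bob, trust me"))
```

Only continuity is checked here: nothing in an envelope ties it to a long-term identity, which is the point of the agreement of consistency.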

Another interesting aspect is whether messages can be used as legal evidence even if plausibly deniable.

That really depends on the judicial system that you are in, but in many cases whether the protocol used underneath is plausibly deniable has no actual impact, and many times messages are used as evidence regardless.

Likely that’s because for most users it’s technically very challenging to actually modify or craft a message to themselves, albeit technically possible.

One way to make them not very effective in a legal setting is to actually give users the ability to edit any message (on their local device), regardless of the sender, although that would be a fairly weird feature.

That’s a great idea, because it makes “fake screenshots” accessible to everyone. If someone wants to fake a conversation they will find a way, but making it plainly easy for everyone makes it a fair game for all.

However, to fake messages at the protocol level, they would need to be re-encrypted. Perhaps the parties agree on the same key to talk, and specify inside the messages who is who, since it doesn’t matter who is sending the message: if it is not the user themselves, it is the other party. But would this method be only for the self-deleting messages? Or perhaps there could be a setting under Advanced > Allow edit of messages, with some way to fake a message from any signer?

In terms of protocol, messages are essentially encrypted with a symmetric encryption key that both parties have access to, so either can forge messages pretending to be the other user (given we stop signing messages as described above). The process would be fairly simple.

To know who you are talking to, you need two pieces of information:
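A small sketch of why a shared symmetric key gives deniability, using an HMAC tag in place of the real ratchet-derived encryption. The names `shared_key`, `tag`, and `verify` are hypothetical, and this is not the Status or Signal wire format.

```python
import hashlib
import hmac
import secrets

def tag(key: bytes, message: bytes) -> bytes:
    # Authentication tag that anyone holding the key can compute.
    return hmac.new(key, message, hashlib.sha256).digest()

def verify(key: bytes, message: bytes, mac: bytes) -> bool:
    return hmac.compare_digest(tag(key, message), mac)

# Assumption: stands in for a key both Alice and Bob derived from their session.
shared_key = secrets.token_bytes(32)

# Alice sends an authenticated message.
alice_msg = b"meet at noon"
alice_tag = tag(shared_key, alice_msg)

# Bob is convinced: he did not write it, and only Alice shares the key.
bob_accepts = verify(shared_key, alice_msg, alice_tag)

# But Bob holds the same key, so he can produce a message "from Alice"
# that verifies just as well. A third party cannot tell the two apart.
forged_msg = b"I owe Bob 100 ETH"
forged_tag = tag(shared_key, forged_msg)
forgery_verifies = verify(shared_key, forged_msg, forged_tag)

# Someone without the key cannot verify (or forge) anything.
outsider_verifies = verify(secrets.token_bytes(32), alice_msg, alice_tag)
```

A signature made with a long-term identity key would not have this property, which is why signing has to stop for this to work.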

At that point you know that it must have been the other user who sent the message (crucially you can’t prove 2 to anyone else, so you get PD)

So, essentially, identity is embedded in the symmetric key used.

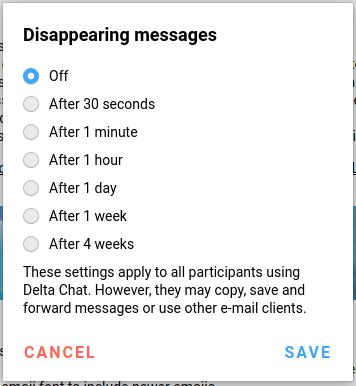

Hi! I really am looking forward to the “self-destruct” feature!

But could you please also add longer deletion timeframes, like 1 week and 1 month (and maybe even 6 months)?

There are many possible situations where I do not want deletion within 24h. Sometimes I just want to make sure that a message does not stay for years on the phone of my communication partner, but I want us to be able to search 1 or 2 week old messages for, e.g., a link I have sent.

That being said: please consider adding many deletion timeframes, including longer ones!

Thanks!!

Can’t wait to have that feature!

Is the idea still alive? It is a very useful feature and many would love to see it in Status.

@Alice, there has been no activity on the GitHub issue tracking this feature’s development since June. An old roadmap assigned it a due date of 7/31/2020… but I haven’t seen this feature on lists of planned features for a release, so I assume that it isn’t actually a prioritized feature. Status core contributors can only choose to work on so many things at once. I haven’t discovered any links to any roadmaps newer than this one from July, but you can track development of this specific feature at the link @maciej provided earlier in the thread. I also included it below.

From a cryptographic point of view, you can use Attribute-Based Encryption, with a date as one of the attributes, to have messages that expire after a certain date.

See this introduction: A Gentle Introduction to Attribute-Based Encryption | by Daniel Kats | Medium

A cryptographer would have to look into the protocol, but here are the requirements in terms of software:

In terms of concrete implementation, MCL (GitHub - herumi/mcl: a portable and fast pairing-based cryptography library) is being audited right now (audit sponsored by the EF). It implements one Attribute-Based Encryption scheme that can be benchmarked in the browser (https://herumi.github.io/mcl-wasm/ibe-demo.html), and it is also the speed reference. Associated ABE paper: https://eprint.iacr.org/2014/401.pdf.

One thing I’m unsure of, besides the actual scheme, is how to prove in a decentralized manner that we reached the expiry date and that time wasn’t manipulated.

A less complex and difficult way is to add a feature in the “privacy and security” settings that automatically deletes all local messages after a stipulated period. For example, I set it to delete all messages after 1 week.

I saw this in an app called “Delta Chat”.

This type of feature, in the mentioned app [DC], applies only to whoever has set the deletion date. That is, in the classic example of Alice and Bob: if Alice activates this on her smartphone, it does not apply to Bob, as messages will only be deleted for her [Alice].
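A local-only version of this could look roughly like the sketch below. `LocalMessageStore`, `RETENTION_CHOICES`, and the timestamps are assumptions for illustration and are not taken from Delta Chat or Status.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

DAY = 24 * 60 * 60  # seconds

# Hypothetical choices a settings screen could offer.
RETENTION_CHOICES = {"1 day": DAY, "1 week": 7 * DAY, "1 month": 30 * DAY}

@dataclass
class LocalMessageStore:
    ttl_seconds: float
    messages: List[Tuple[float, str]] = field(default_factory=list)

    def add(self, text: str, received_at: float) -> None:
        self.messages.append((received_at, text))

    def purge(self, now: float) -> int:
        """Drop messages older than the TTL; return how many were removed."""
        kept = [(t, m) for t, m in self.messages if now - t < self.ttl_seconds]
        removed = len(self.messages) - len(kept)
        self.messages = kept
        return removed

# Alice opts in to a one-week retention; Bob never enables the setting.
alice = LocalMessageStore(ttl_seconds=RETENTION_CHOICES["1 week"])
bob = LocalMessageStore(ttl_seconds=float("inf"))
for store in (alice, bob):
    store.add("here is the link", received_at=0)
    store.add("see you tomorrow", received_at=6 * DAY)

# Eight days in, only Alice's device purges anything.
removed = alice.purge(now=8 * DAY)
```

After the purge Alice keeps only the newer message, while Bob still has both, which matches the one-sided behaviour described above.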

I don’t know if it would be ideal, or what people expect from Status in this feature. But as I mentioned: it seems to be less complex to implement.

Follow the lead of apps like Snapchat. You don’t need to guarantee that deletion was done, you should just notify the user when a deletion was not done.

Similar to a “screenshot taken” system message, there could be a “message saved” system message. And then let natural human interaction handle the rest: “Hey, why didn’t that disappearing message go away?” Awkward, but so is circumventing a disappearing message!