Please can someone give me permission to post multiple links/images?

As you may know, I am currently experimenting with a concept for chat rooms that combines swarm feeds and pss. Recently an idea came up: enabling “band changes” in the chat updates that only the participants know about.

Here’s some background on swarm feeds, if needed: https://github.com/ethersphere/go-ethereum/pull/881 and “Parallel feed lookups” by jpeletier (ethersphere/swarm Pull Request #1332).

I’ll try to keep this simple. Some constraints:

- We can exchange session public keys without outside entities associating them with the identity used in the initial discovery/connect.

- We assume peer discovery and service discovery exist.

- We assume we can promiscuously connect to pss nodes to send and receive in exchange for payment.

- Consensus between peers on who the chat room participants are is out of scope.

Two peers A and B each keep one feed for their one-to-one chat. Each feed is signed by the sender’s session pubkey (PKsesUSER), and its topic contains the peer’s session Ethereum address (ADDR(PKsesPEER)). Thus, if the peers know each other’s session keys, they know how to find each other’s feeds.
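A minimal sketch of how each side could derive both feed identifiers from the two session pubkeys. Assumptions: a feed is identified by a (topic, owner) pair, the topic is simply the peer’s 20-byte address zero-padded to 32 bytes, and `FeedID`, `make_topic`, `my_feed` and `peer_feed` are hypothetical names. Real Ethereum addresses use Keccak-256, which Python’s `hashlib` does not provide, so SHA3-256 stands in for it here.

```python
import hashlib
from dataclasses import dataclass


def eth_address(pubkey: bytes) -> bytes:
    # Stand-in for ADDR(): Ethereum takes the last 20 bytes of Keccak-256(pubkey).
    # hashlib has no Keccak-256, so SHA3-256 is used purely for illustration.
    return hashlib.sha3_256(pubkey).digest()[-20:]


@dataclass(frozen=True)
class FeedID:
    topic: bytes  # 32-byte feed topic
    owner: bytes  # 20-byte address of the session key that signs the feed


def make_topic(peer_addr: bytes) -> bytes:
    # Hypothetical topic layout: the peer's session address, zero-padded to 32 bytes.
    return peer_addr.ljust(32, b"\x00")


def my_feed(my_pub: bytes, peer_pub: bytes) -> FeedID:
    # The feed I write to: signed by my session key, topic names my peer.
    return FeedID(topic=make_topic(eth_address(peer_pub)), owner=eth_address(my_pub))


def peer_feed(my_pub: bytes, peer_pub: bytes) -> FeedID:
    # The feed my peer writes to: signed by their session key, topic names me.
    return FeedID(topic=make_topic(eth_address(my_pub)), owner=eth_address(peer_pub))


pk_a = b"placeholder session pubkey of A"
pk_b = b"placeholder session pubkey of B"
# A can locate B's feed, and it is exactly the feed B writes to.
assert peer_feed(pk_a, pk_b) == my_feed(pk_b, pk_a)
```

An outsider who does not know both session keys cannot compute either identifier, which is what ties feed discovery to the key exchange.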

Updates are posted to swarm, and the feed is updated with the swarm hash of the top (latest) update. Updates point to each other by their respective swarm hashes, forming a chain. Feeds have a resolution of 1 second, but since the updates are chained swarm content hashes rather than feed updates, sub-second updates are possible.
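A toy model of the chaining, assuming a dict as a stand-in for swarm’s content-addressed store and SHA-256 as a stand-in for swarm’s chunk hash; `post_update` and `history` are hypothetical helpers. Several updates can be chained within the same second, and only the final top hash needs to be written to the feed.

```python
import hashlib
import json
from typing import Dict, List, Optional

# Stand-in for swarm's content-addressed store: key = hash of the content.
store: Dict[str, bytes] = {}


def put(obj: dict) -> str:
    data = json.dumps(obj, sort_keys=True).encode()
    key = hashlib.sha256(data).hexdigest()  # SHA-256 stands in for swarm's chunk hash
    store[key] = data
    return key


def get(key: str) -> dict:
    return json.loads(store[key])


def post_update(prev: Optional[str], text: str) -> str:
    # Each update links to the previous one by its content hash.
    return put({"prev": prev, "text": text})


def history(top: Optional[str]) -> List[str]:
    # Walk the chain back from the top hash, then return oldest-first.
    out = []
    while top is not None:
        update = get(top)
        out.append(update["text"])
        top = update["prev"]
    return list(reversed(out))


head = None
for msg in ["hi", "how are you?", "fine"]:
    head = post_update(head, msg)  # three updates, possibly within one second
feed_value = head  # a single feed update publishes the new top hash
print(history(feed_value))
```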

PSS is used to notify the peer about a chat update. When a notification is received, the peer starts polling for new updates. If no new updates are found within a given period, it stops polling or reduces the polling frequency. A new notification resets polling to the default frequency.
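A sketch of that polling policy, assuming exponential backoff that gives up entirely once the interval exceeds a cap. The interval values, the backoff factor, and the choice to reset on finding a new update are illustrative assumptions, not part of the proposal.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Poller:
    default_interval: float = 1.0   # seconds; hypothetical default
    max_interval: float = 60.0      # hypothetical cap before polling stops
    backoff: float = 2.0            # factor applied after an empty poll
    interval: Optional[float] = None  # None means "not polling"

    def on_notify(self) -> None:
        # A pss notification (re)starts polling at the default frequency.
        self.interval = self.default_interval

    def on_poll_result(self, found_new: bool) -> None:
        if self.interval is None:
            return
        if found_new:
            self.interval = self.default_interval
        else:
            nxt = self.interval * self.backoff
            # Reduce frequency; stop polling once the interval passes the cap.
            self.interval = None if nxt > self.max_interval else nxt


p = Poller(default_interval=1.0, max_interval=4.0)
p.on_notify()               # interval 1.0
p.on_poll_result(False)     # interval 2.0
p.on_poll_result(False)     # interval 4.0
p.on_poll_result(False)     # 8.0 > cap, so polling stops (None)
p.on_notify()               # back to 1.0
```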

A single chat is simply compiled by merging the output of the two respective feeds. Contents are encrypted with shared secrets, which are handled by a separate scheme (axolotl-style KDF schemes can be implemented relatively easily by passing a new encryption pubkey with every update).

Figure:

http://swarm-gateways.net/bzz:/a511e9bd8cf14ad1582c133e5090c406a761576e1f03cafde6e0312a57d71506/
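Since each feed’s history is already ordered, compiling the chat can be a k-way merge. This sketch assumes every decrypted update carries a sender-side timestamp (a hypothetical field; peers’ clocks may disagree) and breaks ties by sender name so both peers render the same order.

```python
import heapq
from typing import List, NamedTuple


class Update(NamedTuple):
    ts: float    # sender-side timestamp (hypothetical field)
    sender: str
    text: str


def compile_chat(*feeds: List[Update]) -> List[Update]:
    # Each feed is oldest-first, so a k-way merge yields the combined chat.
    # Ties are broken by sender so every reader gets the same ordering.
    return list(heapq.merge(*feeds, key=lambda u: (u.ts, u.sender)))


feed_a = [Update(1.0, "A", "hi"), Update(3.0, "A", "ok")]
feed_b = [Update(2.0, "B", "hey")]
for u in compile_chat(feed_a, feed_b):
    print(u.sender, u.text)
```

The same function accepts any number of feeds, which is how a multi-user room would be rendered as well.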

Multi-user chat rooms/channels are rendered the same way: every participant has their own feed (here the feed topic is seeded by the room name), and the chat room is the sum of the updates of all those feeds, each signed by the respective participant’s session pubkey. Furthermore, every peer keeps their own version of the room participant list on a separate feed and links this list to every update.

Figure:

http://swarm-gateways.net/bzz:/9bf53d82fbe63cee7d328ee62b43547477da2b0ec3cf752bfd96cbe5a44d0eb5/

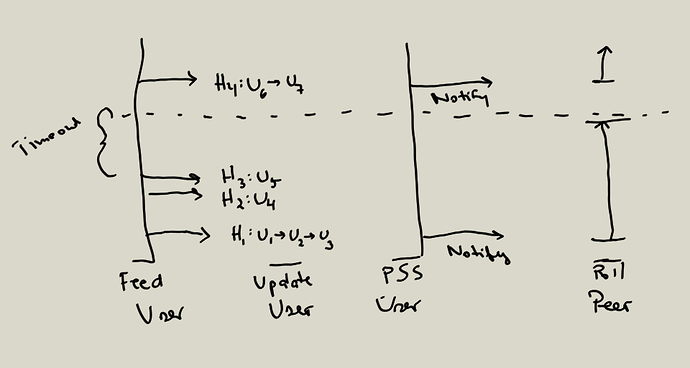
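A sketch of how a peer might render a room from its own participant list. Assumptions: the topic is derived by hashing the room name (SHA3-256 here), feeds are keyed by (topic, participant), and `room_topic`/`room_view` are hypothetical names. Because each peer uses its own list and consensus is out of scope, two peers can legitimately see different rooms.

```python
import hashlib
from typing import Dict, List, Set, Tuple


def room_topic(room_name: str) -> bytes:
    # Hypothetical: feed topic seeded by hashing the room name.
    return hashlib.sha3_256(room_name.encode()).digest()


# (topic, participant) -> that participant's messages, oldest first
Feeds = Dict[Tuple[bytes, str], List[str]]


def room_view(feeds: Feeds, room: str, participants: Set[str]) -> Dict[str, List[str]]:
    # Render the room from THIS peer's participant list only.
    topic = room_topic(room)
    return {p: feeds.get((topic, p), []) for p in sorted(participants)}


t = room_topic("lobby")
feeds: Feeds = {
    (t, "A"): ["a1"],
    (t, "B"): ["b1"],
    (t, "C"): ["c1"],
    (room_topic("other"), "A"): ["not in lobby"],
}
print(room_view(feeds, "lobby", {"A", "B"}))       # this peer does not list C
print(room_view(feeds, "lobby", {"A", "B", "C"}))  # this peer does
```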

Let’s say that an update can contain content but ALSO provide a new feed session pubkey that the next update will be posted to. Peers will then consider that feed the “current” feed and start polling it. The time between jumps can be completely arbitrary.

Figure:

http://swarm-gateways.net/bzz:/e9c3a22cb4845fe2be6f7e798d82b499f5adc535c9a32993787f6fa00b8ef18b/
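A reader-side sketch of following these jumps. Assumptions: feeds are modelled as a dict from a feed key to a list of decrypted updates, and an update may carry a hypothetical `next` field holding the session pubkey of the feed the following update goes to. The function returns the messages read so far and the feed that should now be polled; a visited set guards against a loop of jumps.

```python
from typing import Dict, List, Tuple

# Hypothetical model: feed key -> decrypted updates, oldest first.
Feeds = Dict[str, List[dict]]


def read_hopping(feeds: Feeds, start: str) -> Tuple[List[str], str]:
    current = start
    texts: List[str] = []
    visited = set()
    while current not in visited:
        visited.add(current)
        hop = None
        for update in feeds.get(current, []):
            if "text" in update:
                texts.append(update["text"])
            if "next" in update:
                hop = update["next"]
                break  # later updates are posted to the announced feed
        if hop is None:
            break  # no jump announced: keep polling the current feed
        current = hop
    return texts, current


feeds: Feeds = {
    "k1": [{"text": "hello"}, {"text": "switching", "next": "k2"}],
    "k2": [{"text": "on k2", "next": "k3"}],
    "k3": [{"text": "on k3"}],
}
print(read_hopping(feeds, "k1"))
```

Since the announcement travels inside the encrypted content, only participants learn where the next update will appear.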

Now let’s imagine we can do the same with PSS messages: arbitrarily change the relay nodes used for sending and receiving messages, like a distributed service provider network of sorts. This way we can obfuscate sender and receiver identity while still keeping the kademlia routing advantages that come with PSS. Clients would pay to send and pay to receive.

Figure:

http://swarm-gateways.net/bzz:/a164f21add7705c70732ca6344ff5e387affc0d7e24d91cf70de9f1e9d01c8e1/
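The post leaves open how the peers learn which relays to use. One hypothetical option, not part of the proposal, is to derive the relay for each epoch and role from a shared secret with HMAC, so both sides agree without announcing anything and outsiders cannot predict the rotation. This sketch assumes both peers hold the same relay list in the same order; `relay_for_epoch` is an illustrative name.

```python
import hashlib
import hmac
from typing import List


def relay_for_epoch(shared_secret: bytes, relays: List[str], epoch: int, role: str) -> str:
    # Hypothetical: derive a relay per epoch and role ("send" or "recv") from a
    # shared secret. Without the secret the rotation looks unpredictable.
    msg = f"{role}:{epoch}".encode()
    digest = hmac.new(shared_secret, msg, hashlib.sha256).digest()
    # Modulo bias is negligible for a small relay list and a 64-bit value.
    return relays[int.from_bytes(digest[:8], "big") % len(relays)]


secret = b"placeholder shared secret"
relays = ["relay-1", "relay-2", "relay-3", "relay-4"]  # must be identically ordered on both sides
for epoch in range(3):
    print(epoch, relay_for_epoch(secret, relays, epoch, "recv"))
```

Announcing the next relay inside encrypted updates, as with the feed jumps, would be an alternative with more freedom in when to switch.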

Some thoughts on good stuff this provides:

- data transmission inherits the security properties of RLPx.

- the locations of swarm feed chunks inherit the random distribution of normal swarm chunks.

- hard to guess which feed a sender switches to, because the session key could be anything from the key parameter domain.

- hard to link actual conversation activity to notification messages, because they are not one-to-one.

- hard to track the identity of a pss client between nodes, because pss identity keys are also changed.

Whaddyathink?